Casino games often look simple on the surface: you press a button, spin the reels, and wait for the result. But underneath there’s a complex set of systems designed to produce unpredictable, statistically sound, and manipulation-resistant outcomes. At the center of that design is randomness.

What “random” means in software terms

Understanding randomness in casino games means learning how algorithms, probability, and software constraints interact to produce outcomes that feel fair while remaining computationally efficient. Casino games rely on controlled unpredictability.

Most modern casino software uses pseudorandom number generators (PRNGs). These algorithms produce long sequences of numbers that appear random, despite being generated deterministically from an initial seed value. The quality of a PRNG is judged by the statistical properties of its output and, for gambling in particular, by how difficult it is to predict future values from previous outputs.
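To make "deterministic from a seed" concrete, here is a minimal sketch using Python's standard `random` module. The helper name `first_outputs`, the seed values, and the 0..36 range (a roulette-style wheel) are illustrative assumptions. Python's documentation states that this generator (Mersenne Twister) is not suitable for security purposes, so treat it as a demonstration of the principle rather than the kind of generator a regulated game would use.

```python
import random

def first_outputs(seed: int, count: int = 5) -> list[int]:
    """Return the first `count` values in 0..36 from a generator seeded with `seed`."""
    # random.Random is a Mersenne Twister: fine for illustration,
    # but predictable and not meant for real-money outcomes.
    rng = random.Random(seed)
    return [rng.randrange(37) for _ in range(count)]

run_a = first_outputs(seed=2024)
run_b = first_outputs(seed=2024)

# Same seed, same sequence: the output only looks random.
print(run_a)
print(run_a == run_b)  # True
```

This is why the seed and internal state have to stay secret: anyone who can reconstruct them can reproduce every later value.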

In casino applications, PRNGs are designed to pass rigorous statistical tests, ensuring outcomes are evenly distributed over time and resistant to pattern detection.
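One of the simplest checks of this kind is a chi-square test for uniformity: count how often each value appears and compare the counts with what an even distribution would give. The sketch below is only an illustration of the idea, not a description of any lab's procedure. The bin count, sample size, and seed are arbitrary assumptions, and 16.919 is the standard table value for 9 degrees of freedom at the 5% significance level.

```python
import random
from collections import Counter

def chi_square_uniform(samples: list[int], bins: int) -> float:
    """Chi-square statistic comparing observed bin counts with a uniform expectation."""
    counts = Counter(samples)
    expected = len(samples) / bins
    return sum((counts.get(b, 0) - expected) ** 2 / expected for b in range(bins))

# Draw 100,000 values in 0..9 from a seeded generator (illustrative only).
rng = random.Random(12345)
samples = [rng.randrange(10) for _ in range(100_000)]
stat = chi_square_uniform(samples, bins=10)

CRITICAL_95_DF9 = 16.919  # 10 bins -> 9 degrees of freedom, 5% significance
print(f"chi-square statistic: {stat:.2f}")
print("consistent with uniform" if stat < CRITICAL_95_DF9 else "suspicious, investigate")
```

A single test like this proves little on its own. A good generator will still exceed the threshold about one time in twenty, which is why serious evaluations run many different tests over very large samples.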

Why true randomness isn’t practical

True randomness, derived from physical processes like atmospheric noise or quantum effects, is expensive and slow to integrate, especially at scale. A large casino platform has to produce outcomes at very high volume across thousands of concurrent sessions. That level of use requires algorithms that are fast, repeatable, and auditable.

PRNGs meet those requirements. When properly implemented and regulated, they provide results that are statistically indistinguishable from true randomness for practical purposes.

Separating perception from probability

One of the biggest disconnects between players and software lies in expectation. Humans are poor intuitive statisticians. We expect randomness to “even out” in short runs, when in reality variance is a defining feature of random systems.

This is why streaks, both winning and losing, are not evidence of bias or manipulation. They are expected behaviour in independent trial systems. Each outcome is calculated without reference to what came before it.

From a software perspective, this independence is critical. If outcomes were influenced by previous results, the trials would no longer be independent, and past results would carry information about future ones.
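The claim that streaks are expected behaviour is easy to check with a small simulation. The sketch below flips a fair virtual coin 1,000 times and reports the longest run of identical results. The function name, seed, and trial count are illustrative assumptions.

```python
import random

def longest_streak(outcomes: list[bool]) -> int:
    """Length of the longest run of identical consecutive outcomes."""
    longest = current = 0
    previous = None
    for outcome in outcomes:
        # Extend the run if this outcome repeats the last one, otherwise restart at 1.
        current = current + 1 if outcome == previous else 1
        longest = max(longest, current)
        previous = outcome
    return longest

rng = random.Random(7)
# Each flip is an independent 50/50 trial (True = win, False = loss).
flips = [rng.random() < 0.5 for _ in range(1000)]
print("longest streak in 1,000 fair flips:", longest_streak(flips))
```

In 1,000 fair flips the longest run typically comes out at around ten, because the expected longest run grows roughly with the base-2 logarithm of the number of trials. A streak that feels suspicious to a player is usually just what independent trials look like.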

The role of regulation and verification

Because randomness is not visually observable, trust is established through verification. Regulated casino software is routinely tested by independent labs that audit PRNG implementations, payout distributions, and game logic.

These audits confirm that the mathematical models behave as advertised over large sample sizes, and that no hidden rules alter outcomes based on player behaviour, device type, or session history.
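The idea of checking a model "over large sample sizes" can be sketched as a comparison between a game's theoretical return and the return observed in simulation. Everything in this example is hypothetical: the paytable, its probabilities and payouts, and the function names are invented for illustration and do not describe any real game or audit process.

```python
import random

# Hypothetical paytable: outcome -> (probability, payout per 1 unit staked).
PAYTABLE = {"miss": (0.70, 0.0), "small": (0.25, 2.0), "big": (0.05, 9.0)}

def theoretical_rtp(paytable: dict[str, tuple[float, float]]) -> float:
    """Expected return per unit staked: sum of probability * payout."""
    return sum(prob * payout for prob, payout in paytable.values())

def observed_rtp(paytable: dict[str, tuple[float, float]], spins: int,
                 rng: random.Random) -> float:
    """Average payout per unit staked over `spins` simulated rounds."""
    probs = [prob for prob, _ in paytable.values()]
    payouts = [payout for _, payout in paytable.values()]
    results = rng.choices(payouts, weights=probs, k=spins)
    return sum(results) / spins

rng = random.Random(1)
print(f"theoretical: {theoretical_rtp(PAYTABLE):.4f}")
for spins in (100, 10_000, 200_000):
    print(f"observed over {spins:>7} spins: {observed_rtp(PAYTABLE, spins, rng):.4f}")
```

Short samples can land well away from the theoretical figure, while large samples should settle close to it. That gap is the reason such checks rely on large sample sizes rather than a handful of rounds.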

For developers, this creates strict constraints. Any system that introduces adaptive or player-responsive outcome logic risks failing compliance checks.

Where the player meets the algorithm

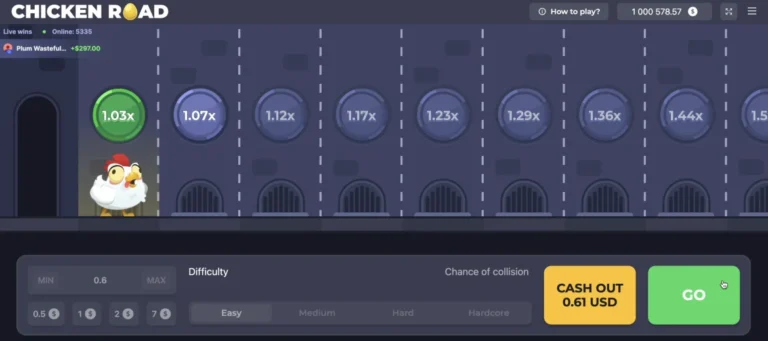

From the player’s perspective, the algorithm is invisible. What they experience is interface, feedback timing, and presentation. But every spin, card draw, or roll is the result of a number generated milliseconds earlier under the hood and mapped onto a predefined outcome table.

In that mapping layer, raw random values are translated into symbols, hands, or wheel positions. It’s also where misconceptions often arise, as visual outcomes can obscure the underlying statistical process.
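A mapping layer of this kind can be sketched as a weighted lookup: draw an integer, then find which slice of a cumulative weight table it falls into. The symbols and weights below are hypothetical, as are the names `OUTCOME_TABLE`, `map_to_symbol`, and `spin`. The draw uses `secrets.randbelow` from Python's standard library, which returns an integer in the range 0 to n-1 from the operating system's secure random source. A regulated game would use its own certified generator instead.

```python
import secrets
from bisect import bisect_right
from itertools import accumulate

# Hypothetical outcome table: (symbol, weight). Weights sum to 100 here,
# so each weight can be read directly as a percentage chance.
OUTCOME_TABLE = [("cherry", 50), ("bell", 30), ("bar", 15), ("seven", 5)]

SYMBOLS = [symbol for symbol, _ in OUTCOME_TABLE]
CUMULATIVE = list(accumulate(weight for _, weight in OUTCOME_TABLE))  # [50, 80, 95, 100]
TOTAL = CUMULATIVE[-1]

def map_to_symbol(raw: int) -> str:
    """Map a raw value in 0..TOTAL-1 to the symbol whose weight range contains it."""
    if not 0 <= raw < TOTAL:
        raise ValueError(f"raw value must be in 0..{TOTAL - 1}")
    return SYMBOLS[bisect_right(CUMULATIVE, raw)]

def spin() -> str:
    """Draw one raw value and translate it into a symbol."""
    # randbelow(TOTAL) gives each integer in 0..TOTAL-1 the same chance,
    # avoiding the bias of reducing a wider random value with the % operator.
    return map_to_symbol(secrets.randbelow(TOTAL))

print(map_to_symbol(0), map_to_symbol(50), map_to_symbol(99))  # cherry bell seven
print(spin())
```

Keeping `map_to_symbol` separate from the random draw means the table can be tested exhaustively: every raw value from 0 to 99 can be fed through it to confirm that each symbol occupies exactly its intended share.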

Why understanding randomness matters

For anyone interested in how casino games actually function, randomness is the foundation. Without it, outcomes could be predicted, exploited, or engineered, breaking both fairness and trust.

Understanding how algorithms enforce randomness clarifies why games behave the way they do, why patterns are unreliable, and why short-term outcomes say little about long-term probabilities.

It also reveals something broader: casino software isn’t built to react to players. It’s built to ignore them completely.